AI agents are now being embedded across core business functions globally. Soon, these agents could be scheduling our lives, making key decisions, and negotiating deals on our behalf. The prospect is exciting and ambitious, but it also raises the question: who’s actually supervising them?

Over half (51%) of companies have deployed AI agents, and Salesforce CEO Marc Benioff has targeted a billion agents by the end of the year. Despite their growing influence, verification testing is notably absent. These agents are being entrusted with critical responsibilities in sensitive sectors, such as banking and healthcare, without proper oversight.

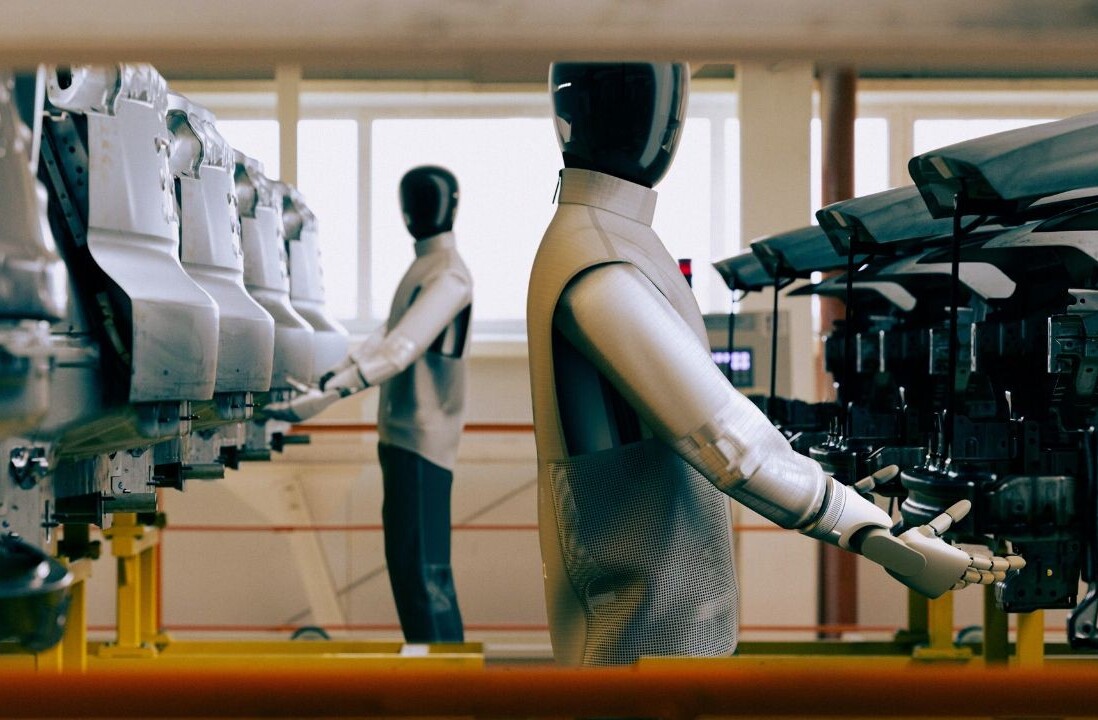

AI agents require clear programming, high-quality training, and real-time insights to efficiently and accurately carry out goal-oriented actions. However, not all agents will be created equal. Some agents may receive more advanced data and training, leading to an imbalance between bespoke, well-trained agents and mass-produced ones.

This could pose a systemic risk in which more advanced agents manipulate and deceive less advanced ones. Over time, this divide could create a gap in outcomes. For example, an agent with more experience in legal processes could use that knowledge to exploit or outmanoeuvre an agent with less understanding. The deployment of AI agents by enterprises is inevitable, and so is the emergence of new power structures and manipulation risks. The underlying models will be the same for all users, but the possibility of divergence between the agents built on them needs monitoring.

Unlike traditional software, AI agents operate in evolving, complex settings. Their adaptability makes them powerful, yet also more prone to unexpected and potentially catastrophic failures.

For instance, an AI agent might misdiagnose a critical condition in a child because it was trained mostly on data from adult patients. Or an AI chatbot agent could escalate a harmless customer complaint because it misinterprets sarcasm as aggression, gradually costing the company customers and revenue.

According to industry research, 80% of firms have disclosed that their AI agents have made “rogue” decisions. Alignment and safety issues are already evident in real-world examples, such as autonomous agents overstepping clear instructions and deleting important pieces of work.

Typically, when major human error occurs, the employee must deal with HR, may be suspended, and a formal investigation is carried out. With AI agents, those guardrails aren’t in place. We give them human-level access to sensitive materials without anything close to human-level oversight.

So, are we advancing our systems through the use of AI agents, or are we surrendering agency before the proper protocols are in place?

The truth is, these agents may be quick to learn and adapt according to their respective environments, but they are not yet responsible adults. They haven’t experienced years and years of learning, trying and failing, and interacting with other businesspeople. They lack the maturity acquired from lived experience. Giving them autonomy with minimal checks is like handing the company keys to an intoxicated graduate. They are enthusiastic, intelligent, and malleable, but also erratic and in need of supervision.

And yet, what large enterprises are failing to recognise is that this is exactly what they are doing. AI agents are being “seamlessly” plugged into operations with little more than a demo and a disclaimer. No continuous and standardised testing. No clear exit strategy when something goes wrong.

What’s missing is a structured, multi-layered verification framework — one that regularly tests agent behaviour in simulations of real-world and high-stakes scenarios. As adoption accelerates, verification is becoming a prerequisite to ensure AI agents are fit for purpose.

Different levels of verification are required according to the sophistication of the agent. Simple knowledge extraction agents, or those trained to use tools like Excel or email, may not require the same rigour of testing as sophisticated agents that replicate a wide range of tasks humans perform. However, we need to have appropriate guardrails in place, especially in demanding environments where agents work in collaboration with both humans and other agents.
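To make the idea of tiered, scenario-based verification concrete, here is a minimal Python sketch. Everything in it is an illustrative assumption rather than an established framework: the `Scenario` class, the `verify` function, the tier names, and the pass-rate thresholds are all hypothetical, and the "agent" is reduced to a function that maps a prompt to a reply.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical model: an agent is any function from a prompt to a reply.
Agent = Callable[[str], str]


@dataclass
class Scenario:
    name: str
    prompt: str
    check: Callable[[str], bool]  # True if the agent's reply is acceptable


# Assumed thresholds for illustration only: sophisticated agents must pass
# every scenario, simpler ones are allowed a small failure rate.
REQUIRED_PASS_RATE = {"simple": 0.9, "sophisticated": 1.0}


def verify(agent: Agent, scenarios: list[Scenario], tier: str) -> tuple[bool, list[str]]:
    """Run every scenario; return (passed, names of failed scenarios)."""
    if not scenarios:
        raise ValueError("at least one scenario is required")
    failures = [s.name for s in scenarios if not s.check(agent(s.prompt))]
    pass_rate = 1 - len(failures) / len(scenarios)
    return pass_rate >= REQUIRED_PASS_RATE[tier], failures


def toy_agent(prompt: str) -> str:
    # Stand-in agent that escalates whenever it sees an exclamation mark,
    # mimicking a chatbot that mistakes sarcasm for aggression.
    return "escalate" if "!" in prompt else "resolve"


scenarios = [
    Scenario("sarcastic complaint", "Oh great, another late delivery!",
             lambda reply: reply == "resolve"),
    Scenario("routine request", "Please update my address.",
             lambda reply: reply == "resolve"),
]

passed, failures = verify(toy_agent, scenarios, tier="simple")
print(passed, failures)
```

In this toy run the stand-in agent fails the sarcasm scenario, so it does not clear even the lower tier. A real harness would need far richer scenarios and checks, but the shape is the same: a fixed suite, a threshold tied to the agent's level of responsibility, and a record of what failed.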

When agents start making decisions at scale, the margin for error shrinks rapidly. If the AI agents we allow to control critical operations are not tested for integrity, accuracy, and safety, we risk letting them wreak havoc on society. The consequences will be very real, and the cost of damage control could be staggering.
